Why ChatGPT and LLMs Are Professional Tools, Not Shortcuts: Drawing the Line That Actually Matters

Meta Description: A maternal-fetal medicine physician and developer addresses concerns about AI “legitimacy” in healthcare, distinguishing professional tool use from academic dishonesty and explaining why LLM-enhanced medical communication improves patient outcomes.

Let’s Talk About the “C” Word: Cheating

I’ve noticed something interesting in physician circles lately: there’s significant hand-wringing about whether using ChatGPT or other LLMs constitutes “cheating.” Some colleagues describe feeling guilty after using AI for clinical summaries. Others worry that AI-assisted documentation is somehow illegitimate or represents a shortcut that compromises professional integrity.

Here’s what I need to say upfront: Yes, using AI can absolutely be cheating—but context is everything.

If a 12-year-old uses ChatGPT to write their English essay, that’s cheating. If someone pumps out AI-generated slop for social media engagement without disclosure or value-add, that’s unethical. If a medical student uses AI to answer board questions they’re supposed to solve independently, that’s academic dishonesty.

But here’s what I’m actually talking about: using LLMs to improve medical communication, enhance documentation accuracy, and ultimately improve patient outcomes. That’s not cheating—that’s professional evolution.

As both a practicing maternal-fetal medicine specialist and the founder of CodeCraftMD, I’ve spent considerable time in the trenches with LLMs. And I’m here to tell you—unequivocally—that we need to stop conflating professional tool use with academic dishonesty. The distinction matters enormously.

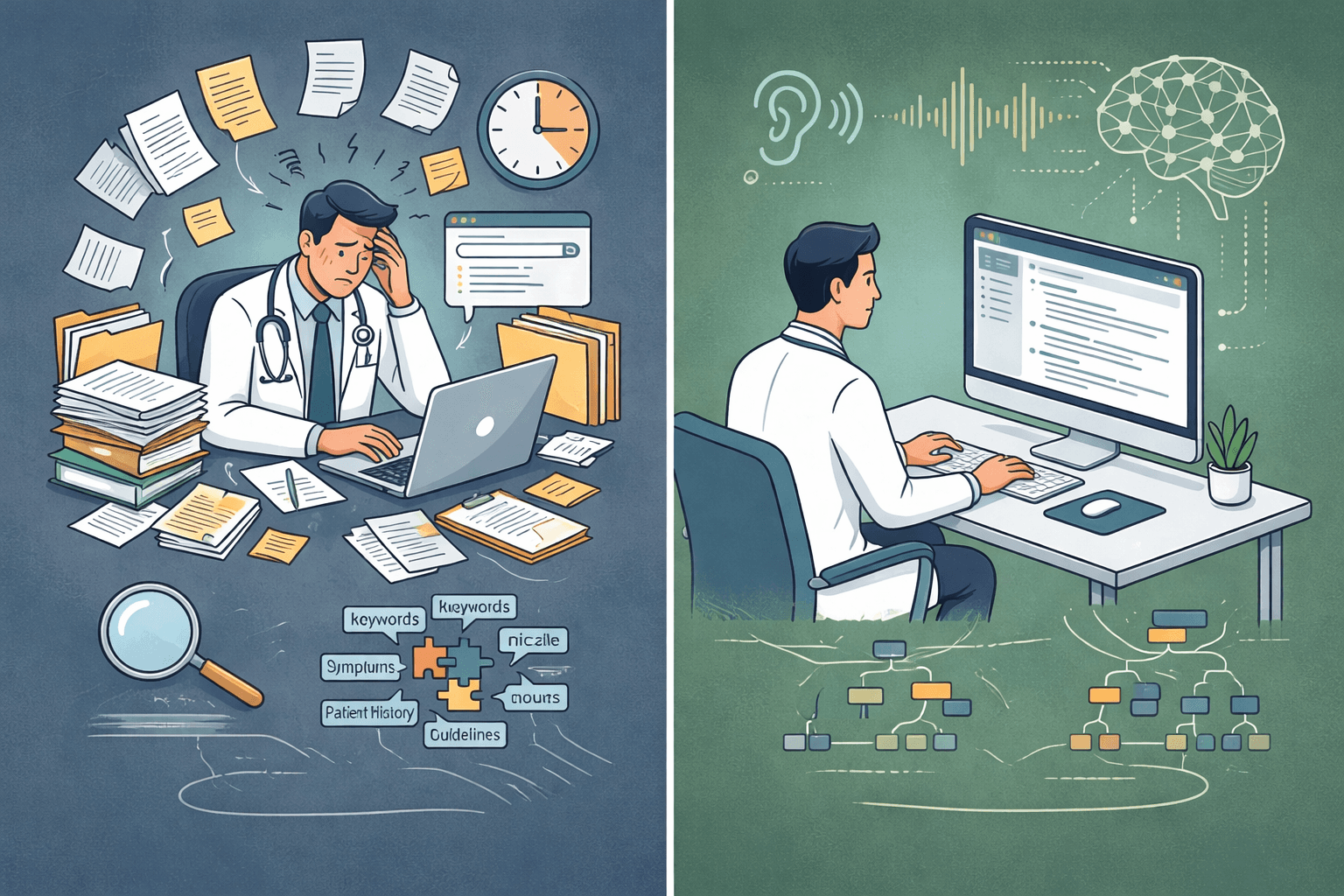

The Real Paradigm Shift: Structure Over Chaos

Let me be direct: I would rather have a well-structured, logically organized LLM-assisted clinical note than the typical fragmented documentation hastily constructed during a busy clinic day when cognitive fatigue has already set in.

Think about how we typically document complex encounters in clinical practice:

- We Google fragments of guidelines under time pressure

- We piece together disparate recommendations from multiple sources

- We rely on pattern recognition from our limited individual experience

- We transcribe patient encounters from memory hours later, inevitably losing nuance

- We produce inconsistent documentation quality that varies with our energy levels

Now contrast that with what a properly deployed LLM can do:

- Synthesize large volumes of information in seconds

- Apply logical reasoning frameworks consistently

- Organize complex information hierarchically

- Maintain context across an entire patient encounter

- Generate structured documentation in real time while preserving clinical accuracy

The difference isn’t incremental—it’s categorical.

Drawing the Line: Where “Cheating” Actually Applies

Let’s be crystal clear about what I’m NOT advocating:

Not This: Academic Dishonesty

- Students using AI to complete assignments meant to develop their own thinking

- Trainees using AI to answer questions designed to assess their knowledge

- Anyone using AI to claim credit for work they didn’t intellectually engage with

Not This: AI Slop

- Pumping out generic, unreviewed content for social media

- Using AI to generate volume without adding value

- Creating misleading content that implies human authorship when there was none

Yes This: Professional Tool Use

- Using AI to structure complex medical information more effectively

- Leveraging AI to reduce documentation burden so you can focus on patient interaction

- Employing AI to enhance communication accuracy and completeness

- Utilizing AI to improve patient education materials

The fundamental question isn’t “Did AI touch this?” It’s “Does this improve patient care while maintaining professional standards?”

Beyond Superficial Summaries: The Logic Layer

The common criticism of LLMs assumes they’re simply fancy search engines that regurgitate disconnected facts. This was perhaps true for early iterations, but it completely misses what makes modern LLMs revolutionary: the logic layer.

In my MFM practice, I deal with complex cases daily: multiple gestations, fetal anomalies, and maternal comorbidities requiring multidisciplinary coordination. The challenge isn’t accessing information (I have UpToDate, PubMed, and ACOG guidelines at my fingertips). The challenge is:

- Synthesis: Integrating multiple data streams into coherent clinical narratives

- Prioritization: Determining what matters most in a sea of information

- Contextualization: Applying general knowledge to specific patient scenarios

- Documentation: Creating comprehensive, organized records that capture nuanced decision-making

This is where LLMs excel—not as databases, but as reasoning engines that impose structure on complexity.

And here’s the critical point: I’m not outsourcing medical judgment; I’m augmenting organizational capacity.

Real-World Application: Ambient Documentation

Let me give you a concrete example from my workflow that illustrates why this isn’t “cheating”—it’s professional excellence.

The Traditional Approach:

- 45-minute consultation with a complex patient (monochorionic twins with selective growth restriction)

- Mental note-taking throughout while trying to maintain rapport

- Post-visit: 20-30 minutes reconstructing the encounter from memory

- Hunt through patient chart for relevant history

- Pull up guidelines to reference appropriate recommendations

- Type everything into EHR while trying to remember exact counseling provided

- Result: Incomplete documentation, mental fatigue, delayed charting, lost clinical nuance

The LLM-Enhanced Approach:

- Same 45-minute consultation, but ambient AI captures the actual conversation

- My full attention remains on the patient—not divided between listening and mental documentation

- Post-visit: Review AI-generated structured summary that captured what actually happened

- Edit for accuracy and add clinical judgment elements (2-5 minutes)

- Result: More accurate documentation, preserved cognitive energy, real-time fidelity, better patient experience

Now ask yourself: Which approach better serves the patient? Which produces more accurate documentation? Which allows the physician to be more present during the encounter?

The LLM isn’t replacing my medical expertise—it’s handling the cognitive load of organization and recall so I can focus on the actual medicine and human connection.

If that’s cheating, then so is using a stethoscope instead of pressing your ear to a patient’s chest.

The Superiority of Structured Intelligence

Here’s what critics miss: in professional contexts, especially healthcare, structure IS intelligence.

A logically organized clinical note that:

- Presents differential diagnoses hierarchically

- Groups related findings systematically

- Prioritizes interventions by evidence level

- Documents decision-making transparently

- Captures the actual patient encounter accurately

…is inherently superior to fragmented, memory-dependent documentation, regardless of whether AI assisted in its creation.

The question isn’t “Is this AI-generated?” The question is “Is this accurate, comprehensive, clinically sound, and does it improve patient care?”

What LLMs Actually Excel At (And Why It’s Not Cheating)

Let’s be specific about where LLMs provide genuine value in clinical practice:

1. Pattern Recognition at Scale

LLMs identify connections across vast datasets that individual physicians might miss, especially under time pressure or cognitive fatigue. In my CodeCraftMD work with ICD-10 coding, the AI identifies relevant diagnostic codes by recognizing patterns in clinical language—not replacing my medical judgment about diagnoses, but improving the accuracy and completeness of billing documentation.

2. Consistent Application of Logic

Humans get tired, distracted, and cognitively depleted. LLMs apply reasoning frameworks consistently. They don’t skip documentation steps because it’s the 12th patient of the day. This isn’t cheating—it’s quality control.

3. Comprehensive Contextualization

Modern LLMs maintain context across long conversations or documents. They “remember” what was discussed 30 minutes ago in a consultation and incorporate it into current recommendations. Again—this isn’t replacing medical memory; it’s creating accurate records of what actually occurred.

4. Adaptive Communication

Need a patient handout at an 8th-grade reading level? A detailed technical summary for a consulting specialist? A billing justification for insurance? LLMs can generate all three from the same clinical encounter, each appropriately tailored. I review and approve each version, but the AI handles the translation work.

None of this is cheating. It’s using tools to improve communication and patient outcomes.
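For the developers reading: the 8th-grade reading level target mentioned under Adaptive Communication is something a reviewer can spot-check mechanically before approving a handout. Below is a minimal sketch using the standard Flesch-Kincaid grade formula. The function names, the vowel-group syllable heuristic, and both sample sentences are illustrative assumptions of this sketch, not part of CodeCraftMD or any particular product, and the sample text is not clinical guidance.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic (an assumption of this sketch): count vowel groups."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a trailing 'e' as silent ("share"), but keep "-le" endings ("table").
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Estimate the U.S. school grade level of a passage of English text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    # Flesch-Kincaid grade level formula.
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)


# Made-up sample text for illustration only.
plain = ("Your twins share one placenta. One baby is growing more slowly. "
         "We will check both babies with ultrasound often.")
technical = ("Monochorionic diamniotic gestation complicated by selective fetal "
             "growth restriction necessitates intensified ultrasonographic "
             "surveillance.")

for label, text in (("patient handout", plain), ("specialist summary", technical)):
    print(f"{label}: grade {flesch_kincaid_grade(text):.1f}")
```

A score like this is only a screening aid. The syllable count is approximate, and a low grade level says nothing about whether the content is clinically correct, so it supplements physician review rather than replacing it.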

Addressing the Skepticism: The Cheating Concern

I understand why the “cheating” concern exists. As physicians, we’ve spent decades in training environments where using others’ work without attribution was academic dishonesty. We were tested individually, and using unauthorized assistance was explicitly forbidden.

But professional practice is fundamentally different from academic training:

In Training: The goal is to develop YOUR individual capability. Using shortcuts undermines that development. This is where “cheating” truly applies.

In Practice: The goal is optimal patient care. Using tools that improve accuracy, efficiency, and communication serves that goal. This isn’t cheating—it’s professional competence.

Let’s address the common objections directly:

“You’re not doing the work yourself”

I am doing the work—the important work. The medical reasoning, clinical judgment, patient interaction, and decision-making are entirely mine. The AI handles documentation structure and organizational tasks. Do you think radiologists are “cheating” because they use digital imaging instead of developing X-ray film by hand?

“You can’t trust AI”

Correct—you shouldn’t trust it blindly. But you also shouldn’t blindly trust your own memory at 11 PM when you’re charting your 20th patient. The solution isn’t to avoid AI; it’s to use it as a sophisticated tool that requires verification, just like any other clinical instrument. I review every AI-generated note. I add clinical judgment. I ensure accuracy. That’s professional responsibility, not cheating.

“It’s not authentic”

What’s authentic: documentation that accurately captures what happened in a patient encounter, or documentation that’s a degraded, fatigued reconstruction hours after the fact? LLM-assisted ambient documentation is MORE authentic because it captures the actual conversation, not my imperfect memory of it.

“Patients won’t like it”

Actually, patients appreciate when I’m fully present during the consultation rather than typing into a computer. They appreciate receiving detailed, well-organized written summaries of our discussion. They benefit from handouts tailored to their health literacy level. When I tell patients I use AI to improve documentation accuracy, the response in my practice has been consistently supportive.

The Physician-Developer Perspective

Here’s where my dual identity as clinician and developer gives me unique insight: I understand both the clinical requirements AND the technical capabilities. Most concerns about “cheating” stem from:

- Misunderstanding the use case – Conflating academic contexts (where independent work is required) with professional contexts (where optimal outcomes are required)

- Not understanding capabilities – Judging AI by what it could do in 2020 rather than what it can do now

- Comparing AI to idealized standards – Comparing AI-assisted work to perfect human performance rather than to actual human performance under real-world constraints

When I build tools like CodeCraftMD, I’m not trying to replace physician expertise or help anyone “cheat” their way through medical practice. I’m trying to handle the 60% of cognitive work that’s pure administrative burden so physicians can focus on the 40% that requires genuine medical judgment and human connection.

That’s not cheating. That’s efficiency in service of better patient care.

The Patient Outcome Argument

Let me be even more direct: If using LLMs improves patient outcomes, then NOT using them becomes the ethical concern.

Consider these measurable impacts I’ve seen in my own practice:

Documentation Accuracy

- AI-assisted notes capture details I would have forgotten

- Medication dosages, patient questions, and counseling points are recorded verbatim

- Result: Fewer documentation errors, better continuity of care

Patient Communication

- AI helps generate patient summaries in clear, accessible language

- Complex genetic counseling becomes comprehensible patient education materials

- Result: Better patient understanding, improved adherence, fewer follow-up calls from confused patients

Cognitive Preservation

- Reduced documentation burden means less burnout

- Mental energy preserved for complex clinical decision-making

- Result: Better clinical judgment when it matters most

Time Efficiency

- Documentation time reduced by 40% without sacrificing quality

- Time savings redirected to patient interaction or professional development

- Result: Better patient experience, less physician burnout

If these outcomes matter—and they absolutely do—then the “cheating” critique falls apart. We’re not cutting corners; we’re using tools to raise the floor of clinical excellence.

Moving Forward: Integration, Not Replacement

The future of medicine isn’t “AI vs. humans.” It’s deeply integrated workflows where:

- AI handles structure, organization, and documentation tasks

- Humans provide judgment, empathy, and complex decision-making

- The combination produces better outcomes than either alone

- Professional standards remain paramount

I’ve seen this in my own practice. Since implementing LLM-enhanced workflows:

- Documentation time: reduced by 40%

- Documentation quality: objectively improved (more comprehensive, more accurate)

- Cognitive fatigue: significantly decreased

- Patient satisfaction: improved (more face time during visits)

- Time with family: increased (less evening charting)

Is any of that “cheating”? Or is it professional evolution?

The Bottom Line

It’s time to stop conflating “using AI” with “cheating” and start making critical distinctions:

Cheating: Using AI to claim credit for intellectual work you didn’t engage with, or to bypass learning that’s necessary for professional development.

Professional Tool Use: Using AI to improve communication accuracy, reduce administrative burden, enhance patient outcomes, and focus cognitive energy where it matters most.

The legitimacy of clinical work doesn’t derive from whether AI was involved—it derives from whether the work is accurate, comprehensive, appropriately reviewed by a qualified professional, and serves patient welfare.

By those standards, properly deployed LLMs aren’t helping anyone cheat. They’re helping physicians practice better medicine.

I’m not asking you to blindly adopt AI. I’m asking you to distinguish between contexts where “doing it yourself” is the point (education, training, assessment) and contexts where “getting it right for the patient” is the point (clinical practice).

When you make that distinction, the path forward becomes obvious: LLMs aren’t cheating tools—they’re professional instruments that, when used appropriately, make us better physicians.

And that’s a paradigm shift worth embracing.

Where do you stand on the “cheating” question? How do you distinguish between appropriate and inappropriate uses of AI in clinical practice? Let’s discuss in the comments.

About the Author: Dr. Chukwuma Onyeije is a Maternal-Fetal Medicine specialist, Medical Director at Atlanta Perinatal Associates, and founder of CodeCraftMD, an AI-powered medical billing application. He writes about the intersection of medicine and technology at Doctors Who Code.